Loopy Features

This page shows examples of Loopy's major features and of how they can be used to answer your scientific questions. For a more exhaustive list click here.

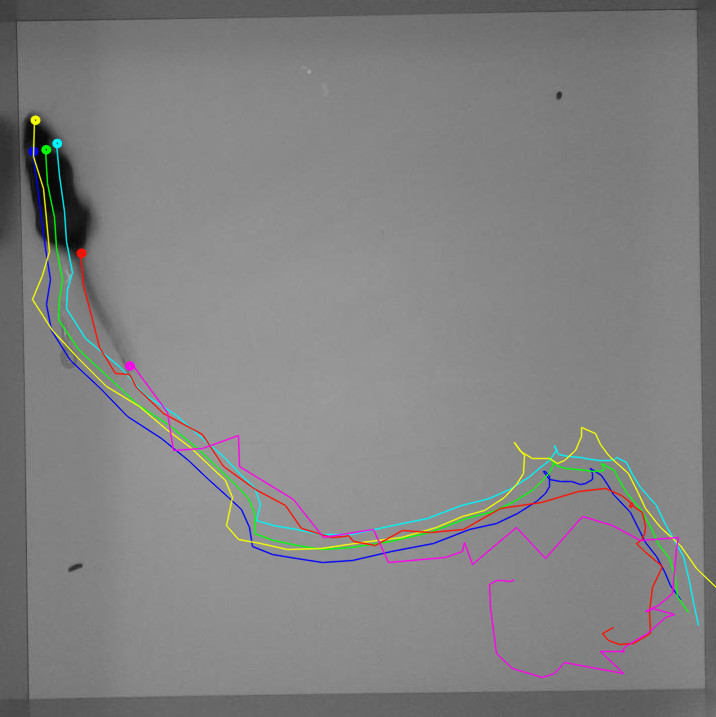

Pose and Body Part Tracking

Pose tracking (also commonly referred to as multi-point or body-part tracking) allows tracking of multiple user-defined parts of an animal or animals. This allows you to precisely quantify the parts of the animal that matter most to your science.

Loopy guides you through the process of creating and testing your custom pose detector.

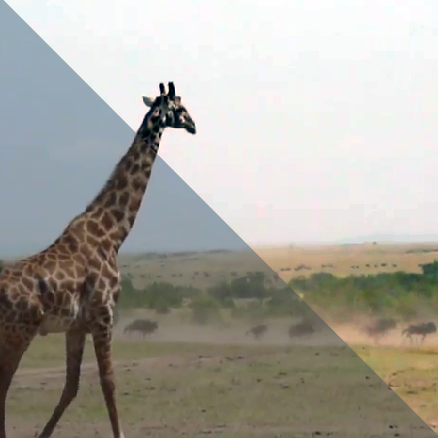

Works on Any Animal

Loopy tracking is not restricted to mice or other common species. The powerful deep learning facilities mean that creating a solution for your experimental paradigm, measuring only what you wish to measure, takes no more than a few hours (a one-time process). Once created, you can track an unlimited number of your videos.

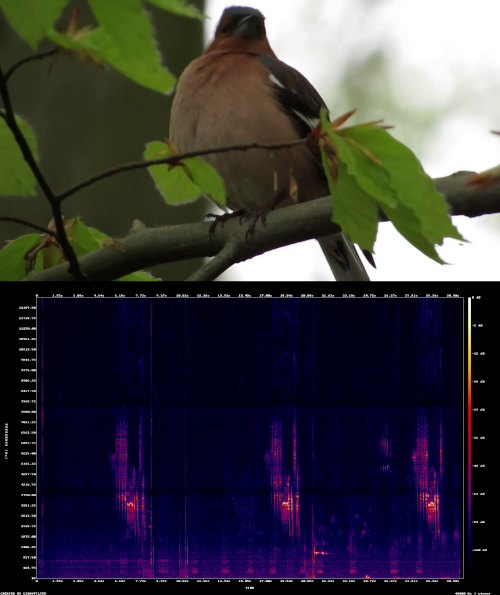

Animal Tracking in Natural Environments Using Deep Learning

Do you need to detect and track animals in a video? Have conventional image processing techniques been insufficient for your needs due to difficult or changing video conditions? Deep learning approaches have taken computer vision by storm, and at loopbio we make them available to you by creating state-of-the-art, deep-learning-based object detectors customized to your scientific needs.

Conventional image processing requires tedious hand-tuning of parameters and strong visual differences between the animal and the background, and often yields poor results. In contrast, deep learning models learn to locate the animals once a small fraction of your videos has been annotated. Our solution makes video annotation comfortable and efficient, includes in-house improvements to tackle problems like crowding and varying light conditions, and works well even on challenging recordings, such as those obtained in natural environments.

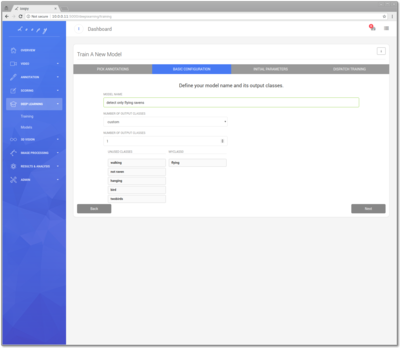

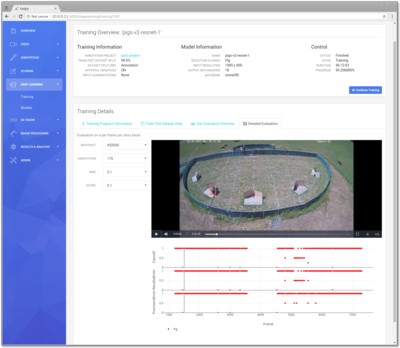

Guided Creation of Custom Detectors

Loopy features a simple step-by-step wizard for creating your own object detectors. Without specialist training you can configure your own deep learning model and start training it on your annotated data.

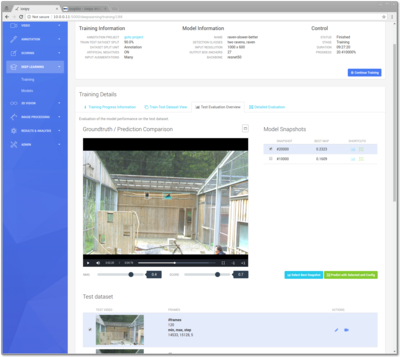

Not sure when you have annotated enough videos? Loopy includes sophisticated feedback for evaluating the performance of your custom detector on videos: quantitative feedback, such as the algorithm's precision and loss, and a qualitative display of how well it detects the objects in real videos you have annotated. Loopy is careful to follow best practices by not testing on video segments on which it was trained.
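The idea of evaluating only on held-out video segments can be sketched as follows. This is a minimal illustration, not Loopy's implementation: the function names, the segment identifiers, and the 20% test fraction are all assumptions made for the example.

```python
import random


def split_segments(segment_ids, test_fraction=0.2, seed=0):
    """Split annotated video segments into disjoint train and test sets.

    Hypothetical helper: segment ids and the test fraction are assumptions.
    """
    ids = sorted(set(segment_ids))
    rng = random.Random(seed)
    rng.shuffle(ids)
    n_test = max(1, round(len(ids) * test_fraction))
    # The first n_test shuffled segments are held out and never trained on.
    return ids[n_test:], ids[:n_test]


def precision(true_positives, false_positives):
    """Fraction of reported detections that were correct."""
    total = true_positives + false_positives
    return true_positives / total if total else 0.0


train, test = split_segments(["seg%02d" % i for i in range(10)])
print(len(train), "training segments,", len(test), "held-out segments")
print("precision:", precision(true_positives=8, false_positives=2))
```

Splitting by segment rather than by individual frame matters because neighbouring frames are nearly identical, so a frame-level split would leak training data into the test set.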

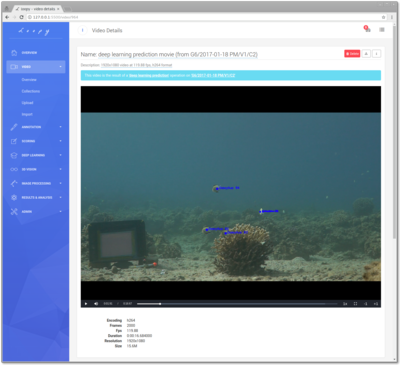

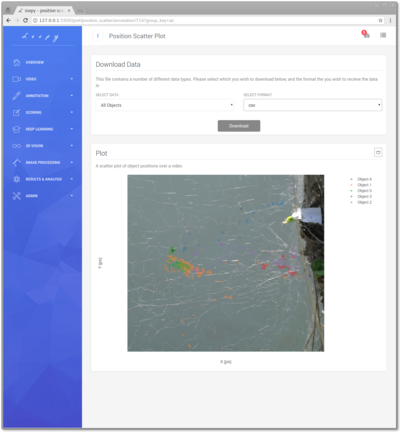

Use Your Custom Detector to Track in Videos

Once your model has been trained and you have decided that its performance is sufficient for your needs, you can use it on your normal videos. Using your detector, Loopy can find all objects in your videos. These results can then be downloaded or analyzed in Loopy using our analysis and plotting tools.

Tracking Single or Multiple Animals in Videos

Loopy includes powerful single and multiple subject tracking algorithms, with more added all the time. If you are unsure about the correct algorithm to use, Loopy includes a novel 'tracking wizard' which helps set various algorithm parameters to their optimum values.

Tracking of multiple individuals with identity. Video Credit: Helder Hugo

Tag and Barcode Tracking

Tag (also known as 2D barcode) tracking provides an efficient and robust way to track multiple individuals, with identity, for extended periods of time. Tags can be printed and detected down to as small as 5x5 pixels in size (depending on camera resolution), and can be attached to diverse species. Loopy provides several different tag types and sizes.
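To judge whether a printed tag will reach the 5x5 pixel size quoted above, a rough calculation can help. The sketch below assumes a camera looking straight at a flat arena with a uniform scale across the image; the function name and the example numbers (a 2 mm tag, a 500 mm wide field of view, a 1920 pixel wide image) are hypothetical.

```python
MIN_TAG_PX = 5  # smallest tag side length quoted in the text above


def tag_size_px(tag_mm, fov_width_mm, image_width_px):
    """Approximate side length of a tag in the image, in pixels.

    Assumes a fronto-parallel view with uniform scale (no perspective
    or lens distortion), which is a simplification.
    """
    return tag_mm * image_width_px / fov_width_mm


size = tag_size_px(tag_mm=2.0, fov_width_mm=500.0, image_width_px=1920)
print("tag side in image: %.2f px" % size)
print("large enough:", size >= MIN_TAG_PX)
```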

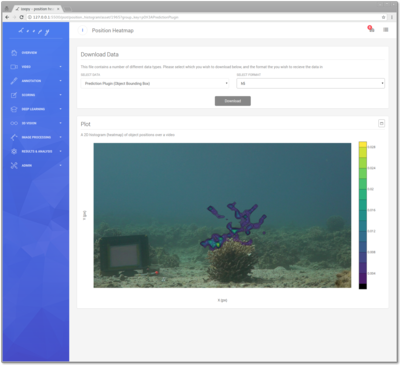

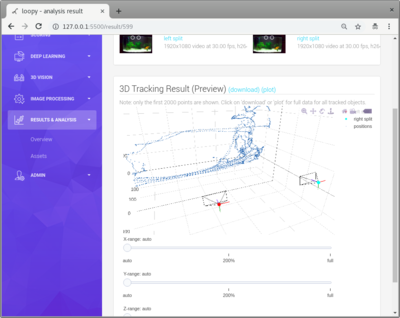

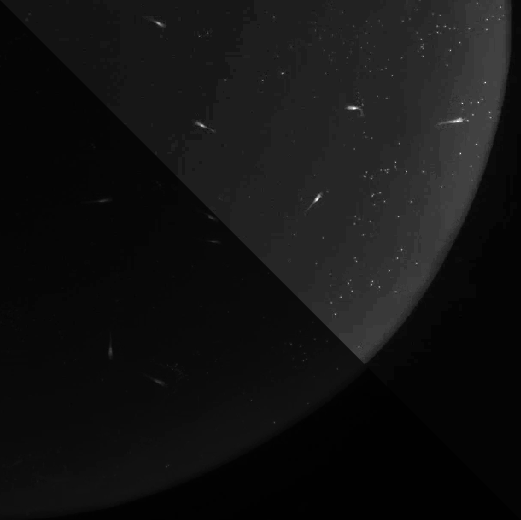

3D Tracking of Animal Movement

The real world is not restricted to two dimensions, and neither should your science be. Animals move in three dimensions, and Loopy lets you track the position of animals or their parts in 3D, both in and out of water. Many other features of Loopy also work seamlessly with 3D tracking, including:

- Train your own deep learning detectors for tracking multiple body parts

- Combine manual annotation of points with automated tracking results to reconstruct positions in 3D

- Allow alignment and scaling of 3D results to your defined laboratory coordinate system

- Analysis of 3D positions including velocity, position and acceleration histograms

- Continuous monitoring of 3D tracking quality using reprojection and tracking errors

- Raw data can be downloaded in a csv or h5 format for further analysis
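As an illustration of further analysis on downloaded data, the sketch below computes speed from a 3D track stored as CSV. The column names `t`, `x`, `y`, `z` and the sample values are assumptions made for this example; the actual layout of the exported file may differ.

```python
import csv
import io
import math

# Hypothetical export: time in seconds, position in metres.
SAMPLE_CSV = """t,x,y,z
0.0,0.0,0.0,0.0
0.5,1.0,2.0,2.0
1.0,1.0,2.0,2.0
"""


def load_track(text):
    """Parse CSV text into a list of (t, x, y, z) tuples."""
    rows = csv.DictReader(io.StringIO(text))
    return [(float(r["t"]), float(r["x"]), float(r["y"]), float(r["z"]))
            for r in rows]


def speeds(track):
    """Speed between consecutive samples: 3D distance divided by elapsed time."""
    out = []
    for (t0, *p0), (t1, *p1) in zip(track, track[1:]):
        out.append(math.dist(p0, p1) / (t1 - t0))
    return out


track = load_track(SAMPLE_CSV)
print(speeds(track))
```

Acceleration can be estimated the same way by differencing the speeds over time.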

Everything in One Place

Loopy has all the tools needed for 3D vision, from synchronizing multiple videos and creating a custom detector for the body parts or objects you wish to track, through to plotting and visualizing 3D data.

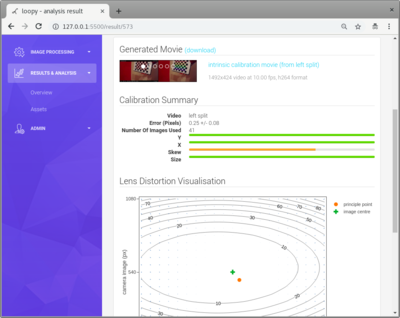

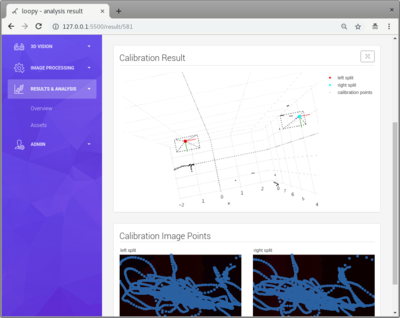

Camera Calibration

Camera calibration is a delicate and difficult aspect of 3D tracking. Loopy provides tools for intrinsic and extrinsic calibration of cameras and camera systems. It also includes helpful visualizations that allow you to evaluate the quality of your calibrations before using them for 3D tracking and reconstruction.
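Reprojection error, listed earlier as a 3D tracking quality measure, is also a common way to judge a calibration: a reconstructed 3D point is projected back into a camera and compared with where it was actually detected. The sketch below assumes an ideal pinhole camera at the origin looking along +z, with no lens distortion; the focal length, principal point, and sample points are hypothetical values, and this is not a description of how Loopy computes the error.

```python
import math


def project(point_3d, focal_px, cx, cy):
    """Project a 3D point (camera coordinates) with an ideal pinhole model."""
    x, y, z = point_3d
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def reprojection_error(point_3d, observed_px, focal_px, cx, cy):
    """Pixel distance between the projected point and the observed detection."""
    u, v = project(point_3d, focal_px, cx, cy)
    return math.hypot(u - observed_px[0], v - observed_px[1])


err = reprojection_error(
    point_3d=(0.1, -0.05, 2.0),   # hypothetical reconstructed point, metres
    observed_px=(693.0, 339.0),   # hypothetical detection in the image
    focal_px=1000.0, cx=640.0, cy=360.0,
)
print("reprojection error: %.2f px" % err)
```

A small error across all cameras suggests the calibration and the reconstruction agree; a large error in one camera points at that camera's calibration or detections.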

Versatile

Loopy supports camera arrangements ranging from stereo setups to large multi-camera installations with more than 10 cameras. It works both indoors and outside, in the lab and in the field.

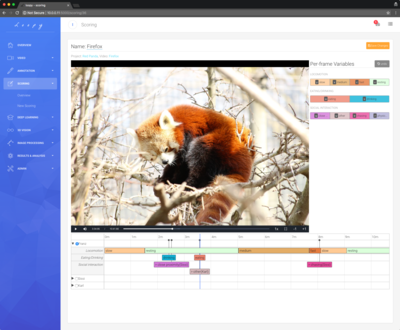

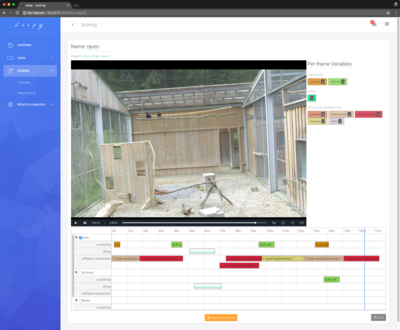

Scoring and Coding of Subject Behavior

Many studies rely on manual coding to record the behavior of subjects in videos. Loopy includes a powerful module that lets you code behavior efficiently and flexibly from the comfort of your web browser.

- Code an unlimited number of behaviors from one or more videos

- Play back videos at 1x - 8x real speed, and single frame step forwards and backwards

- Configure extensive and customized keyboard shortcuts - you can code an entire video without touching the mouse

- Supports modifiers for behaviors and multiple coding types (focal, ad libitum, etc)

- Includes a powerful social scoring module allowing you to code any behavior as the interactions between subjects

- Behaviors may be events (single point in time) or durations (have a start and end time)

- Can score synchronized videos - multiple views from multiple cameras - of the same scene at once
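The distinction between events and durations can be made concrete with a small data structure. This is a sketch only: the class, its field names, and the sample records are hypothetical and do not reflect Loopy's export format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CodedBehavior:
    subject: str
    name: str
    start_s: float
    end_s: Optional[float] = None  # None marks an event (single point in time)

    @property
    def is_event(self):
        return self.end_s is None

    @property
    def duration_s(self):
        # Events have no extent in time; durations span start to end.
        return 0.0 if self.is_event else self.end_s - self.start_s


def total_duration(records, name):
    """Summed time spent in a named duration behavior."""
    return sum(r.duration_s for r in records if r.name == name)


def event_count(records, name):
    """Number of times a named event behavior was coded."""
    return sum(1 for r in records if r.name == name and r.is_event)


records = [
    CodedBehavior("bird1", "grooming", 2.0, 5.5),
    CodedBehavior("bird1", "peck", 7.25),
    CodedBehavior("bird1", "grooming", 10.0, 12.0),
]
print(total_duration(records, "grooming"), event_count(records, "peck"))
```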

Efficient Coding Interface

The coding user interface is being continually refined to improve the user experience and coding efficiency. Notable features for high-efficiency coding include:

- Unlimited Undo. If you make any coding mistake you can go back in time and correct it

- Dual-monitor support. You can 'pop-out' the video player in order to maximize the size of the video, and to see the entire coding sheet at once (great for large ethograms).

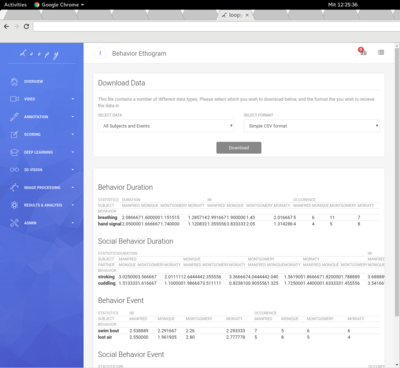

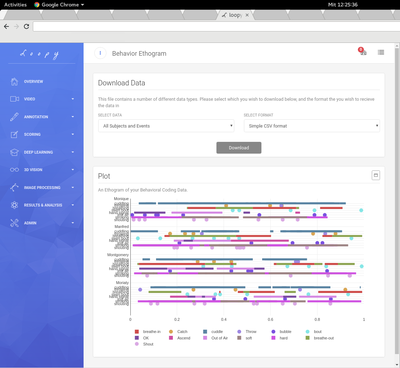

Powerful Analyses

Many powerful analysis types are available within Loopy, such as behavioral ethograms per video or subject, robust summary statistics for both social and non-social behaviors, and much more. If you have an existing analysis in R, Python, or Excel, the raw data can be downloaded in a number of formats.

Comparison of coding between different observers is also available. Combined with the video management features, this makes Loopy a perfect tool for managing a large coding project with several different coders.
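One widely used statistic for comparing two observers is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is offered as an example of such a comparison on downloaded data; the text does not say which statistic Loopy itself reports, and the labels are made up.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two observers labelling the same intervals."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equally long, non-empty label lists")
    n = len(labels_a)
    # Proportion of intervals on which both observers agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected if both observers labelled independently.
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both observers used one identical label throughout
    return (observed - expected) / (1 - expected)


observer_1 = ["rest", "rest", "feed", "feed"]
observer_2 = ["rest", "feed", "feed", "feed"]
kappa = cohens_kappa(observer_1, observer_2)
print("kappa:", kappa)
```

A kappa of 1 means perfect agreement, and 0 means agreement no better than chance.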

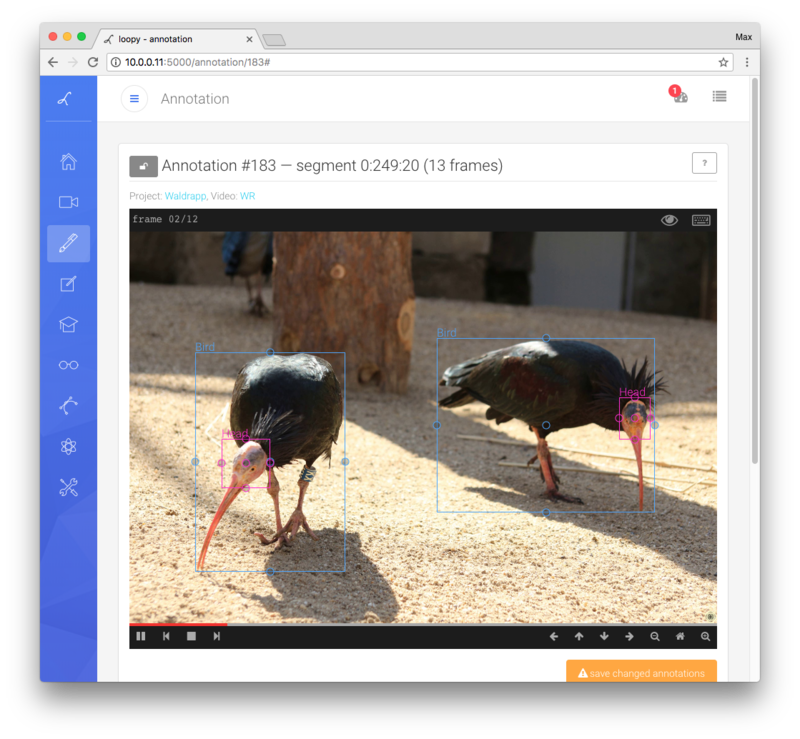

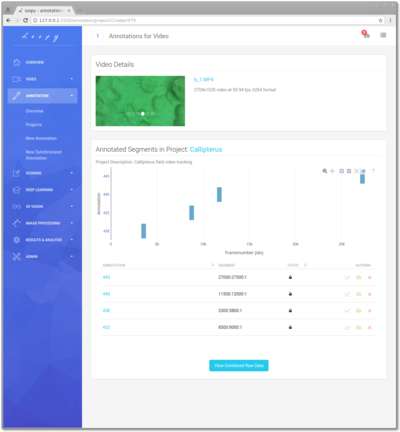

Annotation of Subject Positions and Attributes

Deep learning needs training data that shows the location of objects or object attributes. Our web-based annotation tool lets you collect rich data from videos in a comfortable way. If you are an AI researcher, Loopy could be the perfect solution to your ground-truth video data needs. If you are a scientist who wishes to code spatial attributes from videos, the annotation module of Loopy allows you to do so. If you intend to use Loopy to train your own object detector, you will use the annotation module for this task. The annotation tool allows you to:

- Annotate points and boxes in videos (polygons coming soon)

- Assistance in selecting an appropriate temporal resolution to annotate

- Attach modifiers to all annotated objects (difficulty score, occlusion, custom tags, etc)

- Guided / predictive box annotation - Loopy minimizes the amount of annotation work by sensible and configurable prediction and interpolation of annotated objects between frames

- Powerful keyboard shortcuts, mouse-free annotation possible

- Raw annotation data available in industry standard (h5, VOC-XML, etc) formats

- Annotation statistics, coverage, size, progress are shown
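Interpolating annotated boxes between frames, mentioned in the list above, can be illustrated with simple linear interpolation between two annotated keyframes. Linear interpolation is an assumption chosen for this sketch; the text does not specify which prediction method Loopy uses, and the `(x, y, width, height)` box layout is hypothetical.

```python
def interpolate_box(box_a, frame_a, box_b, frame_b, frame):
    """Linearly interpolate an (x, y, width, height) box between two keyframes."""
    if frame_a == frame_b or not frame_a <= frame <= frame_b:
        raise ValueError("frame must lie between two distinct keyframes")
    weight = (frame - frame_a) / (frame_b - frame_a)
    return tuple(a + weight * (b - a) for a, b in zip(box_a, box_b))


# Box annotated by hand at frames 0 and 10; frame 5 is filled in automatically.
box = interpolate_box((10, 20, 40, 30), 0, (30, 40, 40, 30), 10, 5)
print(box)
```

With this approach the annotator only corrects frames where the interpolated box drifts away from the animal, instead of drawing every box by hand.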

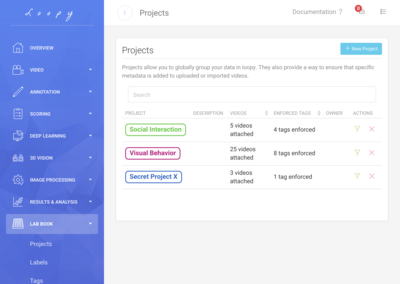

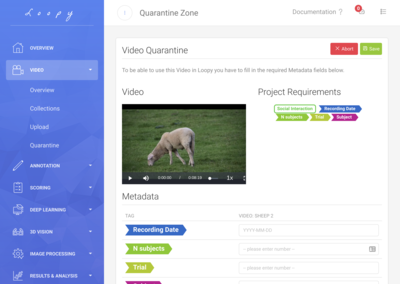

Video and Metadata Management

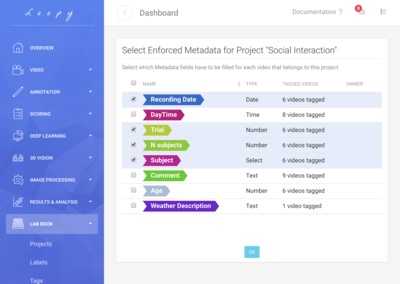

Managing large sets of videos can be a challenging task. Videos can come from a variety of sources and it can be easy to mislabel or confuse similar data. Loopy includes powerful features for attaching structured metadata to videos and then using this metadata for organization and navigation throughout the site.

For example, you can define a project that requires the genotype, treatment, age, or any other label or tag you define to be entered for every uploaded video.

When uploading a video you can choose to associate it with a project. In this case, Loopy ensures that you have filled out all the required metadata before making the video available for analysis.

Compulsory metadata enforcement can help with license, regulatory, or experimental protocol compliance by ensuring all necessary information is collected. If you have a large number of users or an on-site instance then it can be used to ensure all members of your research group adhere to the same naming, reporting and data management standards.
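The principle of compulsory metadata can be sketched as a simple check that blocks a video until every required field is filled in. The field names follow the genotype, treatment, and age example above; the function names and the dictionary representation are assumptions for illustration, not Loopy's interface.

```python
# Required fields for a hypothetical project.
REQUIRED_FIELDS = ("genotype", "treatment", "age")


def missing_metadata(metadata, required=REQUIRED_FIELDS):
    """Return the required fields that are absent or blank."""
    missing = []
    for field in required:
        value = metadata.get(field)
        if value is None or str(value).strip() == "":
            missing.append(field)
    return missing


def can_release(metadata):
    """A video becomes available for analysis only when nothing is missing."""
    return not missing_metadata(metadata)


video = {"genotype": "wt", "treatment": " ", "age": 12}
print(missing_metadata(video), can_release(video))
```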

Image Processing and Video Editing

Loopy comes with a number of general purpose image processing algorithms including slow-motion and summary video generation, optical character recognition, color and white-balance correction and much more. These operations can be used to pre-process videos before tracking, or as stand-alone tools.

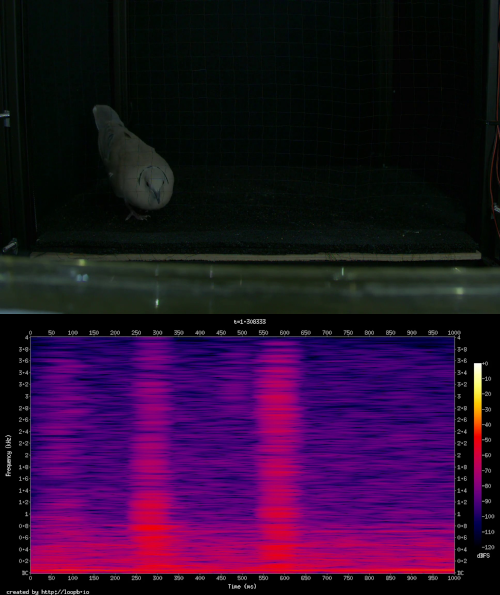

Audio Visualisation

Loopy can create visualisations of the audio channel(s) of your videos. These visualisations can then be used in the behavioral coding module to assist with coding subject behavior. Available audio visualisations include:

- Audio waveform display

- Animated (scrolls horizontally synchronized with the video) or static spectrogram

- Animated volume histogram

- Power spectrum (audio frequency vs. amplitude)
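To make the last item concrete, the sketch below computes a power spectrum for a short synthetic tone using a naive discrete Fourier transform from the standard library. It is written for clarity, not speed; real audio would normally be processed with an FFT library. The function name, the 64 Hz sample rate, and the 8 Hz test tone are assumptions chosen to keep the example small.

```python
import cmath
import math


def power_spectrum(samples, sample_rate):
    """Return (frequency_hz, power) pairs for the non-negative frequencies."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2 + 1):
        # Naive DFT coefficient for frequency bin k.
        coeff = sum(s * cmath.exp(-2j * math.pi * k * i / n)
                    for i, s in enumerate(samples))
        spectrum.append((k * sample_rate / n, abs(coeff) ** 2 / n))
    return spectrum


rate = 64  # samples per second (deliberately tiny for the example)
tone = [math.sin(2 * math.pi * 8 * i / rate) for i in range(rate)]
peak_hz = max(power_spectrum(tone, rate), key=lambda pair: pair[1])[0]
print("dominant frequency:", peak_hz, "Hz")
```

A spectrogram is the same calculation repeated over short, successive windows of the recording, which is what makes it scroll in sync with the video.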

loopbio / back to loopy
